A Conversation With Blood & Clay Film Director

A Q&A With Martin Rahmlow, Director of Blood & Clay Short Film

Blood & Clay (https://bloodandclay.com/bnc.php) is an animated short film (~20 min) co-directed by Martin Rahmlow and Albert Radl. It tells the story of the orphan Lizbeth, who tries to escape her nemesis, Director Kosswick, and his Golem. The film is produced in a hybrid stop-motion/CGI technique by a three-member team: Martin Rahmlow, Albert Radl and Onni Pohl.

We spoke with Martin Rahmlow, the film's director, to learn how the team is using iPi Soft (https://www.ipisoft.com/) technology in its production pipeline.

Q. Please tell us a few words about yourself and the team.

All three of us met 20 years ago at film school, the Filmakademie Baden-Württemberg. Albert supported me during the production of my first stop-motion movie ‘Jimmy’ (https://vimeo.com/manage/videos/66549889) and I did the VFX for Albert’s movie ‘Prinz Ratte’ (https://www.amazon.de/Prinz-Ratte-Albert-Radl/dp/B07HM1WMGD). Blood & Clay (https://bloodandclay.com/bnc.php) is the first movie on which all three of us are collaborating. Albert and I are the directors, and Onni focuses on the technical side.

Q. How did the team first hear about iPiSoft Mocap, and how long has the team been using it?

In May 2015, Onni was assigned to set up real-life 3D characters for a Mercedes-Benz Sprinter advertisement (production: Infected). The spot showed a frozen moment at a festival. There was a live shoot, but all the background people were created from poses extracted from tracking data made with iPi. A colleague of Onni's, Fabian Schaper, had proposed this concept, as he had a Kinect camera and a suitable notebook.

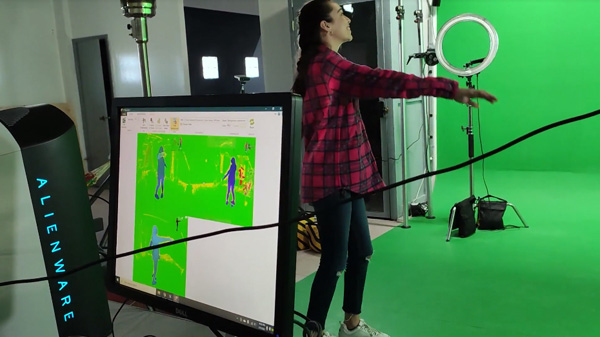

A year ago, we discussed this possibility within our team and, after trying the software, decided that it could help us with Blood & Clay, as we have human-like characters and a lot of animation. We did a few tests in the beginning but only had a serious recording session (with an actress and our two directors) in May. We still have to process and clean the data, and we will hold another session for some missing details.

Q. What is the storyline for the film?

The movie tells the story of the orphan Lizbeth, who tries to escape her nemesis, Director Kosswick, and his Golem.

Q. Please describe briefly your production pipeline, and the role of iPi Mocap in the pipeline.

- We use Prism as our production pipeline and have scripted small add-ons for our project

- Texturing and detailing is done with Substance and ZBrush

- Modelling and rigging is done in Maya

- Characters get an individual (simplified) mocap rig

- The sets are scratch-built and painted miniatures (scale 1:4), which are then scanned and turned into 3D assets

- The layout version of the film (animatic) may contain raw parts of the first mocap session

- During the animation phase, we plan to use our recorded animation (and loops created from it) as a basis for all possible body movements

- We use Ragdoll to simulate cloth and hair and YETI for fur and hair FX

- For final rendering we’re considering several options: either the classic path-tracing route with a renderer like Arnold, or rendering with a game engine like Unity or Unreal
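
As an illustration of the loop idea mentioned in the list above, here is a minimal sketch of one common way to turn a recorded clip into a seamless loop: cross-fading the frames at the end of the clip into the frames at its start. It is not the team's actual tooling. The function name `make_loop`, the plain list-of-floats pose format, and the linear blend are all assumptions made for illustration; a real rig would typically blend rotations with quaternion interpolation instead.

```python
def make_loop(frames, blend):
    """Return a loopable clip by cross-fading the clip's tail into its head.

    frames: list of poses, each a list of floats (one value per channel).
            This flat layout is a hypothetical stand-in for real mocap data.
    blend:  number of frames used for the cross-fade.
    """
    n = len(frames)
    if blend < 1 or 2 * blend > n:
        raise ValueError("blend must be between 1 and half the clip length")

    # The loop keeps the first n - blend frames; the last `blend` frames
    # are only used as blend material for the start of the loop.
    loop_len = n - blend
    loop = [list(pose) for pose in frames[:loop_len]]

    for i in range(blend):
        # The weight rises from mostly "tail" to mostly "head", so the last
        # loop frame flows into the first one without a visible pop.
        w = (i + 1) / (blend + 1)
        tail = frames[loop_len + i]
        head = frames[i]
        loop[i] = [(1 - w) * t + w * h for t, h in zip(tail, head)]

    return loop


# Usage: a 10-frame, single-channel clip with a 2-frame cross-fade.
clip = [[float(i)] for i in range(10)]
looped = make_loop(clip, blend=2)
```

The result is shorter than the input by `blend` frames, and only the first `blend` frames differ from the recording, which keeps most of the captured motion untouched.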

Q. How long has the team been working on the film? What were the overall technical challenges that the team faced, and how does iPi Mocap help to meet them?

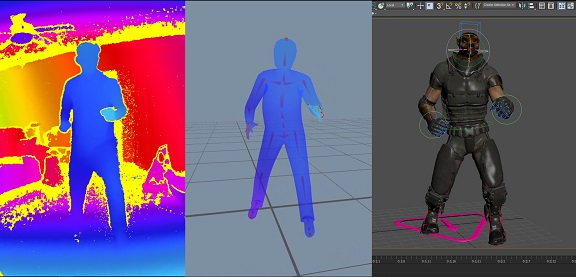

We started production in 2020. Since it will be a 20-minute short, we have a lot of animation with three very different but (partly) human characters. iPi's ability to load an individual rig for export lets us fit the recording to the character perfectly at export time. Eventually, all scenes with intensive human body movement on the ground (not hanging or dangling) are planned to get a base animation for body movement, with head tracking, from iPi; sometimes we use separate hand tracking. Fingers and faces will be animated separately.

We had a first technical test in January. It took some hours until we found the perfect way to calibrate our two cams. We did our recording session in May, using 2 Kinect v2 cams and 2 JoyCon controllers. We have another Azure Kinect, but unfortunately it can’t be used together with Kinect v2. Nevertheless, our calibration was good and the tests we did with our recordings turned out very promising.

At the moment we are experimenting with iPi to assist with a roto scene. In this shot, hands are shaping clay, and we want to replace the hands and fingers with 3D hands. We shot a close-up of hands shaping clay and recorded it with iPi from another perspective, using special trackers for the hands. We hope this will give us a base for the roto and make the finger roto-motion easier, as hands are always quite difficult to track when the fingers move a lot. Because we had limited space, we modified the T-pose: the arms were stretched out to the front.

In our recording session, we also recorded movements such as crawling and somebody pushing himself forward with his arms while sitting on a rolling board. We have not processed these recordings yet and are very curious whether iPi will be able to help us with this task.
